Basic Descriptive Statistics in Python (Wisconsin Breast Cancer Dataset)

Max / 2021-03-14

Basic statistics

- Histogram

- Outliers

- Box Plot

- Summary Statistics

- Relationship Between Variables

- Correlation

- Covariance

- Pearson Correlation

- Spearman’s Rank Correlation

- Mean VS Median

- Hypothesis Testing

- Normal(Gaussian) Distribution and z-score

# import libraries

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

from scipy import stats

plt.style.use("ggplot")

import warnings

warnings.filterwarnings("ignore")

from scipy import stats# read data as pandas data frame

data = pd.read_csv("/Users/max/Documents/Data Science/Projects/data/desc_stats_Tumor_data.csv")

data = data.drop(['Unnamed: 32','id'],axis = 1)# quick look to data

data.head()## diagnosis radius_mean ... symmetry_worst fractal_dimension_worst

## 0 M 17.99 ... 0.4601 0.11890

## 1 M 20.57 ... 0.2750 0.08902

## 2 M 19.69 ... 0.3613 0.08758

## 3 M 11.42 ... 0.6638 0.17300

## 4 M 20.29 ... 0.2364 0.07678

##

## [5 rows x 31 columns]data.shape # (569, 31)## (569, 31)data.columns ## Index(['diagnosis', 'radius_mean', 'texture_mean', 'perimeter_mean',

## 'area_mean', 'smoothness_mean', 'compactness_mean', 'concavity_mean',

## 'concave points_mean', 'symmetry_mean', 'fractal_dimension_mean',

## 'radius_se', 'texture_se', 'perimeter_se', 'area_se', 'smoothness_se',

## 'compactness_se', 'concavity_se', 'concave points_se', 'symmetry_se',

## 'fractal_dimension_se', 'radius_worst', 'texture_worst',

## 'perimeter_worst', 'area_worst', 'smoothness_worst',

## 'compactness_worst', 'concavity_worst', 'concave points_worst',

## 'symmetry_worst', 'fractal_dimension_worst'],

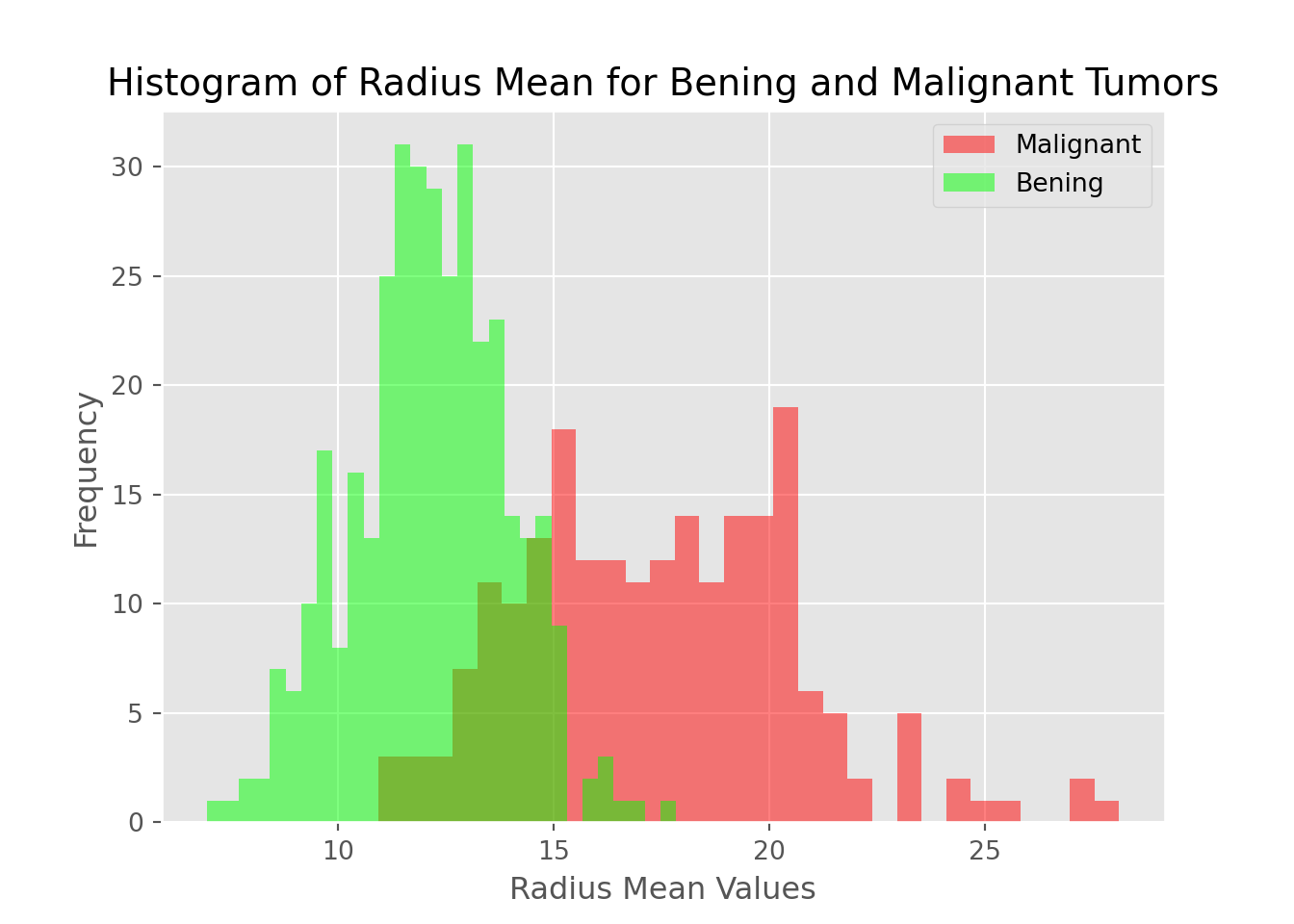

## dtype='object')Histogram

- How many times each value appears in dataset. This description is called the distribution of variable

- Frequency = number of times each value appears

- Most common way to represent distribution of varible is histogram that is graph which shows frequency of each value.

- Example: [1,1,1,1,2,2,2]. Frequency of 1 is four and frequency of 2 is three.

m = plt.hist(data[data["diagnosis"] == "M"].radius_mean,bins=30,fc = (1,0,0,0.5),label = "Malignant")

b = plt.hist(data[data["diagnosis"] == "B"].radius_mean,bins=30,fc = (0,1,0,0.5),label = "Bening")

plt.legend()## <matplotlib.legend.Legend object at 0x7f883ad95240>plt.xlabel("Radius Mean Values")## Text(0.5, 0, 'Radius Mean Values')plt.ylabel("Frequency")## Text(0, 0.5, 'Frequency')plt.title("Histogram of Radius Mean for Bening and Malignant Tumors")## Text(0.5, 1.0, 'Histogram of Radius Mean for Bening and Malignant Tumors')plt.show()

Conclusions

- Radius mean of malignant tumors are bigger than radius mean of bening tumors mostly

- The bening distribution (green in graph) is approcimately bell-shaped that is shape of normal distribution (gaussian distribution)

Outliers

- rare values in bening distribution (green graph)

- There values can be errors or rare events

- These errors and rare events are so called outliers

- Calculating outliers:

- calculate first quartile (Q1)(25%)

- find IQR(inter quartile range) = Q3-Q1

- compute Q1 - 1.5IQR and Q3 + 1.5IQR

data_bening = data[data["diagnosis"] == "B"]

data_malignant = data[data["diagnosis"] == "M"]

desc = data_bening.radius_mean.describe()

Q1 = desc[4]

Q3 = desc[6]

IQR = Q3-Q1

lower_bound = Q1 - 1.5*IQR

upper_bound = Q3 + 1.5*IQR

print("Anything outside this range is an outlier: (", lower_bound ,",", upper_bound,")")## Anything outside this range is an outlier: ( 7.645000000000001 , 16.805 )data_bening[data_bening.radius_mean < lower_bound].radius_mean## 101 6.981

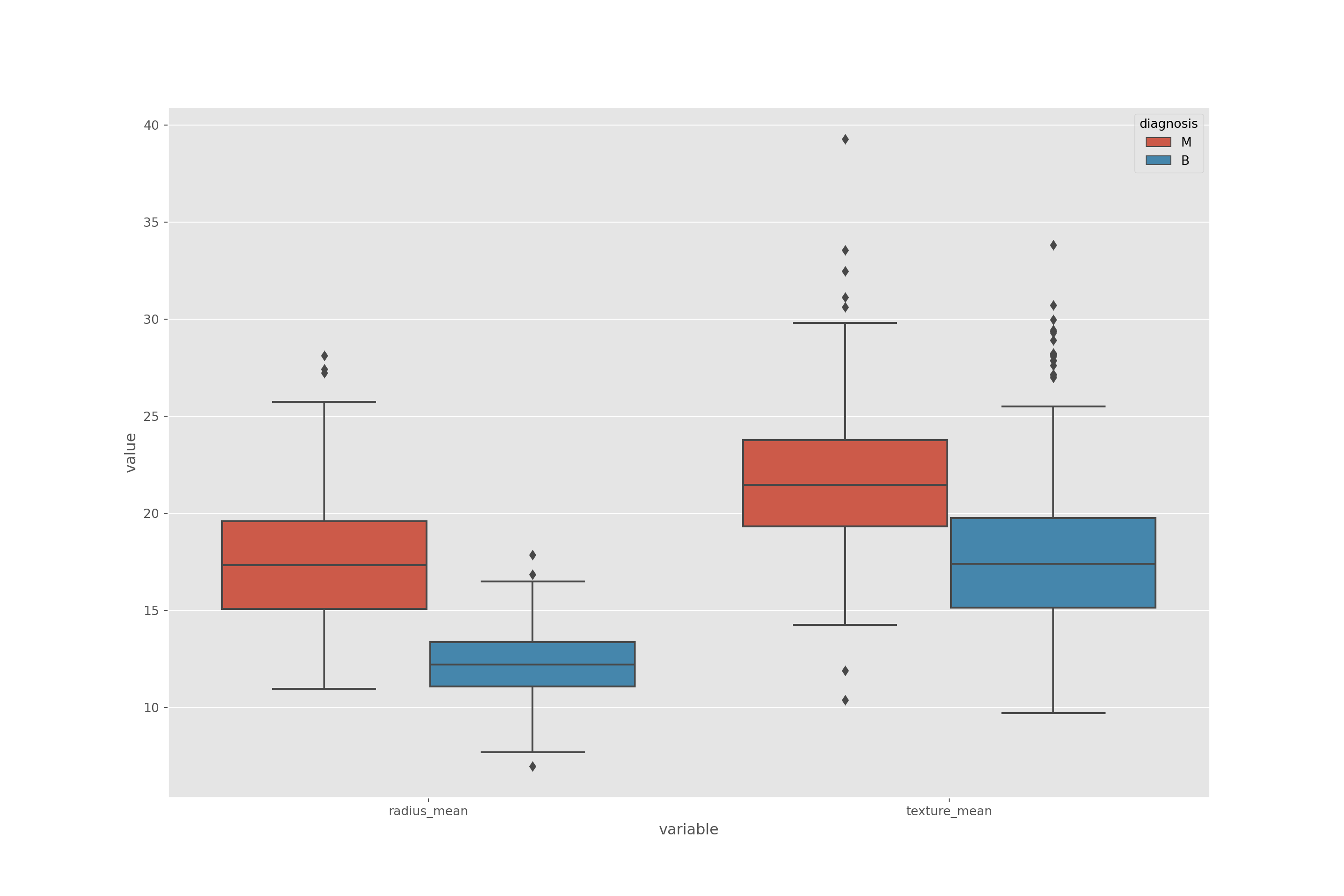

## Name: radius_mean, dtype: float64print("Outliers: ",data_bening[(data_bening.radius_mean < lower_bound) | (data_bening.radius_mean > upper_bound)].radius_mean.values)## Outliers: [ 6.981 16.84 17.85 ]Box Plot

- You can see outliers also from box plots

- We found 3 outlier in bening radius mean and in box plot there are 3 outlier.

melted_data = pd.melt(data,id_vars = "diagnosis",value_vars = ['radius_mean', 'texture_mean'])

plt.figure(figsize = (15,10))sns.boxplot(x = "variable", y = "value", hue="diagnosis",data= melted_data)## <AxesSubplot:xlabel='variable', ylabel='value'>plt.show()

Summary Statistics

- Mean

- Variance: spread of distribution

- Standart deviation square root of variance

- Lets look at summary statistics of bening tumor radiance mean

print("mean: ",data_bening.radius_mean.mean())## mean: 12.146523809523808print("variance: ",data_bening.radius_mean.var())## variance: 3.1702217220438738print("standart deviation (std): ",data_bening.radius_mean.std())## standart deviation (std): 1.7805116461410393print("describe method: ",data_bening.radius_mean.describe())## describe method: count 357.000000

## mean 12.146524

## std 1.780512

## min 6.981000

## 25% 11.080000

## 50% 12.200000

## 75% 13.370000

## max 17.850000

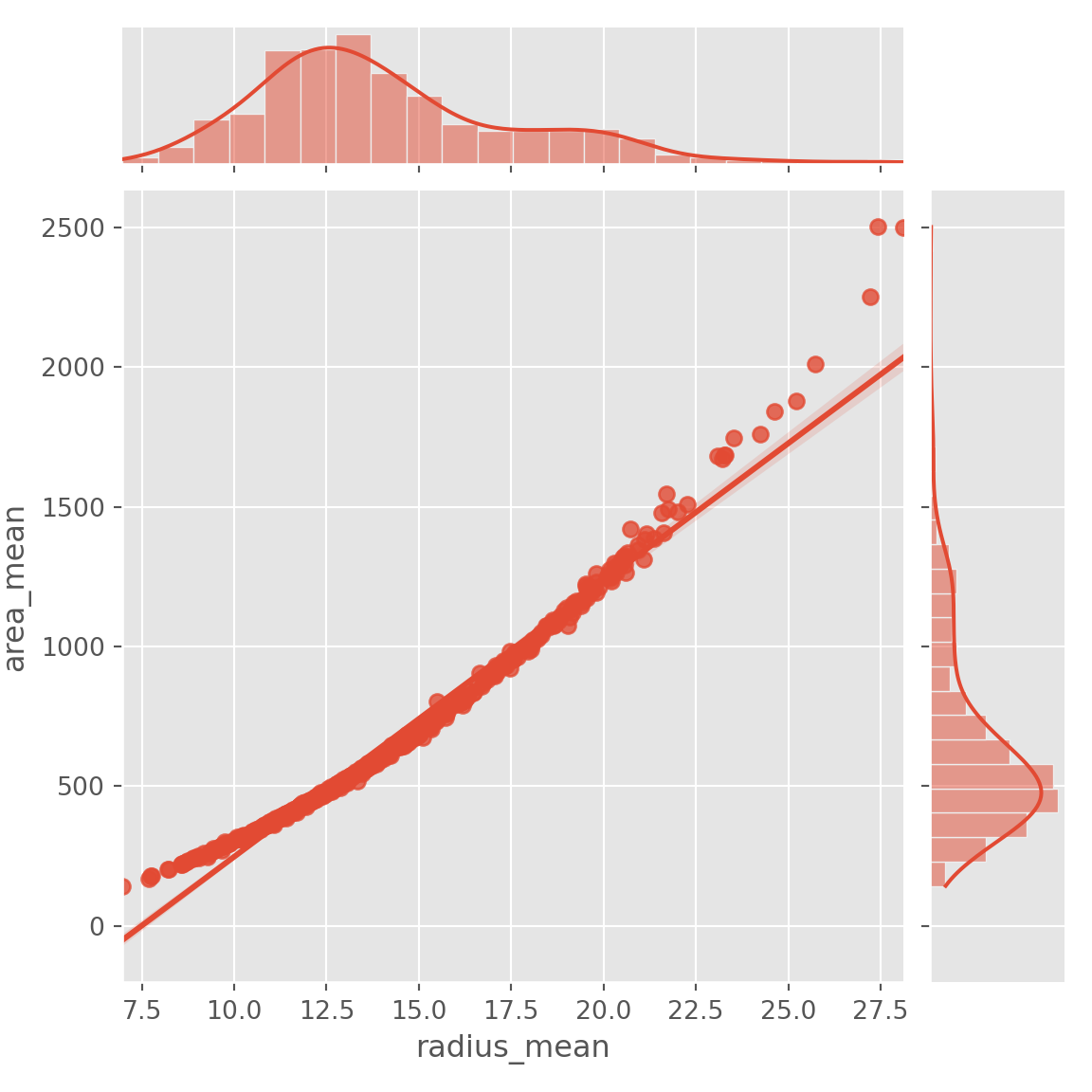

## Name: radius_mean, dtype: float64Relationship Between Variables

- We can say that two variables are related with each other, if one of them gives information about others

- For example, price and distance. If you go long distance with taxi you will pay more. There fore we can say that price and distance are positively related with each other.

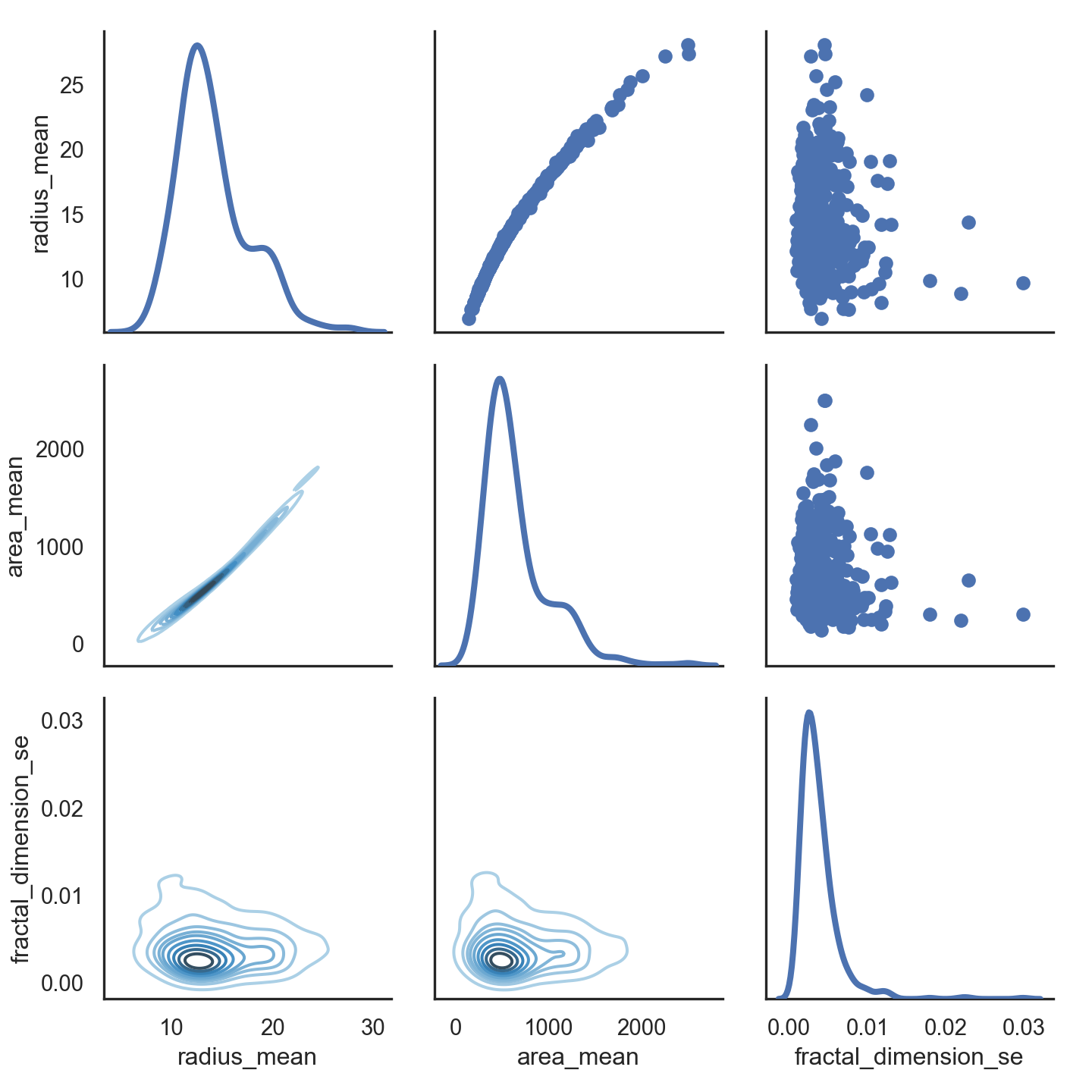

- Scatter Plot

- Simplest way to check relationship between two variables

- Lets look at relationship between radius mean and area mean

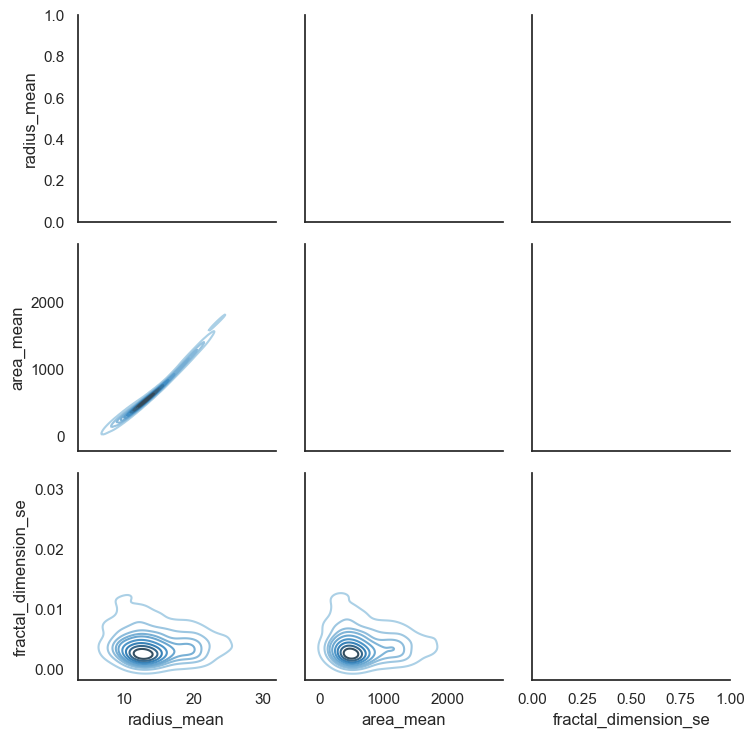

- In scatter plot you can see that when radius mean increases, area mean also increases. Therefore, they are positively correlated with each other.

- There is no correlation between area mean and fractal dimension se. Because when area mean changes, fractal dimension se is not affected by chance of area mean

plt.figure(figsize = (15,10))sns.jointplot(x= data.radius_mean, y= data.area_mean,kind="reg")## <seaborn.axisgrid.JointGrid object at 0x7f883c747780>plt.show()

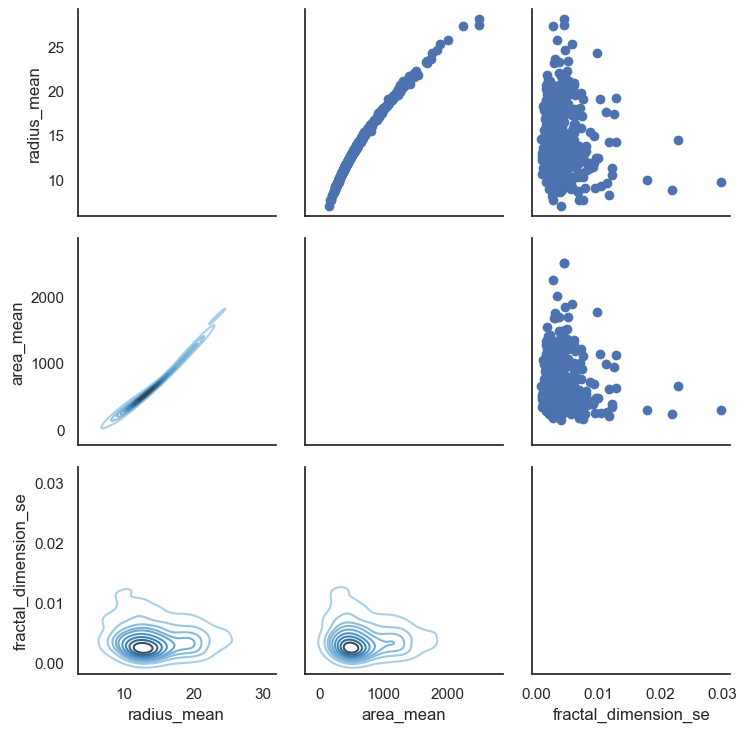

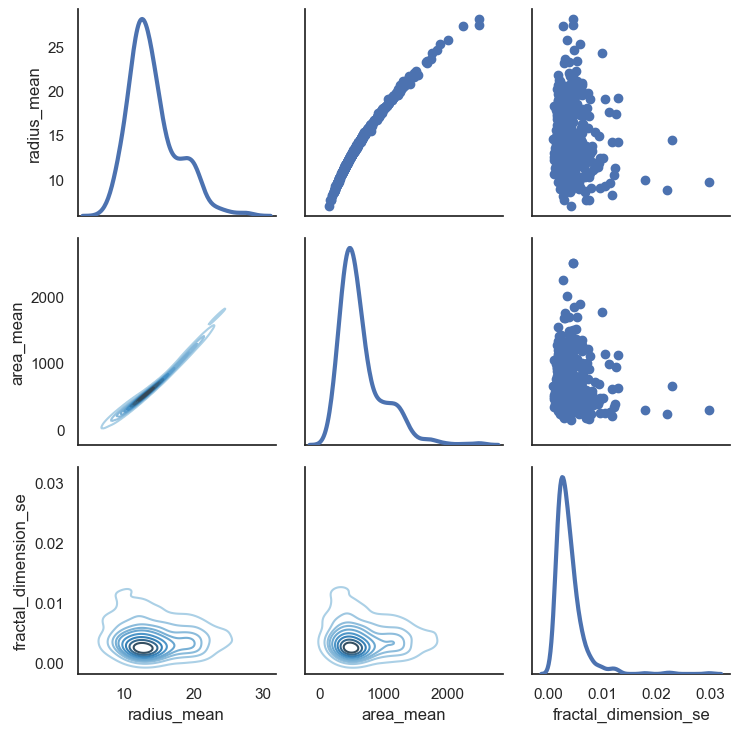

# Also we can look relationship between more than 2 distribution

sns.set(style = "white")

df = data.loc[:,["radius_mean","area_mean","fractal_dimension_se"]]

g = sns.PairGrid(df,diag_sharey = False,)

g.map_lower(sns.kdeplot,cmap="Blues_d")

g.map_upper(plt.scatter)

g.map_diag(sns.kdeplot,lw =3)

plt.show()

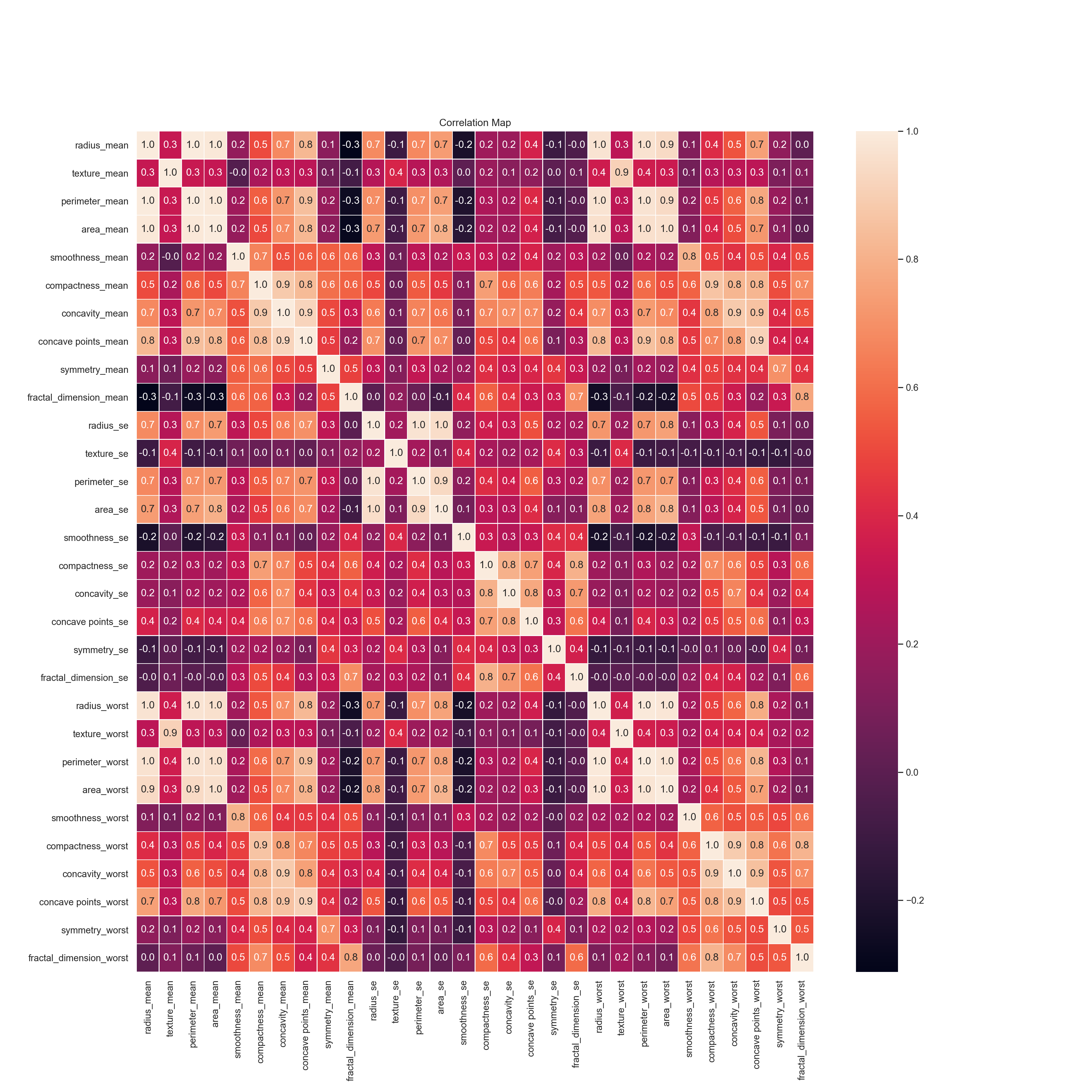

Correlation

- Strength of the relationship between two variables

- Lets look at correlation between all features.

f,ax=plt.subplots(figsize = (18,18))

sns.heatmap(data.corr(),annot= True,linewidths=0.5,fmt = ".1f",ax=ax)## <AxesSubplot:>plt.xticks(rotation=90)## (array([ 0.5, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5, 10.5,

## 11.5, 12.5, 13.5, 14.5, 15.5, 16.5, 17.5, 18.5, 19.5, 20.5, 21.5,

## 22.5, 23.5, 24.5, 25.5, 26.5, 27.5, 28.5, 29.5]), [Text(0.5, 0, 'radius_mean'), Text(1.5, 0, 'texture_mean'), Text(2.5, 0, 'perimeter_mean'), Text(3.5, 0, 'area_mean'), Text(4.5, 0, 'smoothness_mean'), Text(5.5, 0, 'compactness_mean'), Text(6.5, 0, 'concavity_mean'), Text(7.5, 0, 'concave points_mean'), Text(8.5, 0, 'symmetry_mean'), Text(9.5, 0, 'fractal_dimension_mean'), Text(10.5, 0, 'radius_se'), Text(11.5, 0, 'texture_se'), Text(12.5, 0, 'perimeter_se'), Text(13.5, 0, 'area_se'), Text(14.5, 0, 'smoothness_se'), Text(15.5, 0, 'compactness_se'), Text(16.5, 0, 'concavity_se'), Text(17.5, 0, 'concave points_se'), Text(18.5, 0, 'symmetry_se'), Text(19.5, 0, 'fractal_dimension_se'), Text(20.5, 0, 'radius_worst'), Text(21.5, 0, 'texture_worst'), Text(22.5, 0, 'perimeter_worst'), Text(23.5, 0, 'area_worst'), Text(24.5, 0, 'smoothness_worst'), Text(25.5, 0, 'compactness_worst'), Text(26.5, 0, 'concavity_worst'), Text(27.5, 0, 'concave points_worst'), Text(28.5, 0, 'symmetry_worst'), Text(29.5, 0, 'fractal_dimension_worst')])plt.yticks(rotation=0)## (array([ 0.5, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5, 10.5,

## 11.5, 12.5, 13.5, 14.5, 15.5, 16.5, 17.5, 18.5, 19.5, 20.5, 21.5,

## 22.5, 23.5, 24.5, 25.5, 26.5, 27.5, 28.5, 29.5]), [Text(0, 0.5, 'radius_mean'), Text(0, 1.5, 'texture_mean'), Text(0, 2.5, 'perimeter_mean'), Text(0, 3.5, 'area_mean'), Text(0, 4.5, 'smoothness_mean'), Text(0, 5.5, 'compactness_mean'), Text(0, 6.5, 'concavity_mean'), Text(0, 7.5, 'concave points_mean'), Text(0, 8.5, 'symmetry_mean'), Text(0, 9.5, 'fractal_dimension_mean'), Text(0, 10.5, 'radius_se'), Text(0, 11.5, 'texture_se'), Text(0, 12.5, 'perimeter_se'), Text(0, 13.5, 'area_se'), Text(0, 14.5, 'smoothness_se'), Text(0, 15.5, 'compactness_se'), Text(0, 16.5, 'concavity_se'), Text(0, 17.5, 'concave points_se'), Text(0, 18.5, 'symmetry_se'), Text(0, 19.5, 'fractal_dimension_se'), Text(0, 20.5, 'radius_worst'), Text(0, 21.5, 'texture_worst'), Text(0, 22.5, 'perimeter_worst'), Text(0, 23.5, 'area_worst'), Text(0, 24.5, 'smoothness_worst'), Text(0, 25.5, 'compactness_worst'), Text(0, 26.5, 'concavity_worst'), Text(0, 27.5, 'concave points_worst'), Text(0, 28.5, 'symmetry_worst'), Text(0, 29.5, 'fractal_dimension_worst')])plt.title('Correlation Map')## Text(0.5, 1.0, 'Correlation Map')plt.savefig('graph.png')

plt.show()

- Huge matrix that includes a lot of numbers

- The range of this numbers are -1 to 1.

- Meaning of 1 is two variable are positively correlated with each other like radius mean and area mean

- Meaning of zero is there is no correlation between variables like radius mean and fractal dimension se

- Meaning of -1 is two variables are negatively correlated with each other like radius mean and fractal dimension mean.Actually correlation between of them is not -1, it is -0.3 but the idea is that if sign of correlation is negative that means that there is negative correlation.

Covariance

- Covariance is measure of the tendency of two variables to vary together

- So covariance is maximized if two vectors are identical

- Covariance is zero if they are orthogonal.

- Covariance is negative if they point in opposite direction

- Lets look at covariance between radius mean and area mean. Then look at radius mean and fractal dimension se

np.cov(data.radius_mean,data.area_mean)## array([[1.24189201e+01, 1.22448341e+03],

## [1.22448341e+03, 1.23843554e+05]])print("Covariance between radius mean and area mean: ",data.radius_mean.cov(data.area_mean))## Covariance between radius mean and area mean: 1224.4834093464567print("Covariance between radius mean and fractal dimension se: ",data.radius_mean.cov(data.fractal_dimension_se))## Covariance between radius mean and fractal dimension se: -0.00039762485764406277Pearson Correlation

- Division of covariance by standart deviation of variables

- Lets look at pearson correlation between radius mean and area mean

- First lets use .corr() method that we used actually at correlation part. In correlation part we actually used pearson correlation :)

- p1 and p2 is the same. In p1 we use corr() method, in p2 we apply definition of pearson correlation (cov(A,B)/(std(A)*std(B)))

- As we expect pearson correlation between area_mean and area_mean is 1 that means that they are same distribution

- Also pearson correlation between area_mean and radius_mean is 0.98 that means that they are positively correlated with each other and relationship between of the is very high.

- To be more clear what we did at correlation part and pearson correlation part is same.

p1 = data.loc[:,["area_mean","radius_mean"]].corr(method= "pearson")

p2 = data.radius_mean.cov(data.area_mean)/(data.radius_mean.std()*data.area_mean.std())

print('Pearson correlation: ')## Pearson correlation:print(p1)## area_mean radius_mean

## area_mean 1.000000 0.987357

## radius_mean 0.987357 1.000000print('Pearson correlation: ',p2)## Pearson correlation: 0.9873571700566125Spearman’s Rank Correlation

- Pearson correlation works well if the relationship between variables are linear and variables are roughly normal. But it is not robust, if there are outliers

- To compute spearman’s correlation we need to compute rank of each value

ranked_data = data.rank()

spearman_corr = ranked_data.loc[:,["area_mean","radius_mean"]].corr(method= "pearson")

print("Spearman's correlation: ")## Spearman's correlation:print(spearman_corr)## area_mean radius_mean

## area_mean 1.000000 0.999602

## radius_mean 0.999602 1.000000- Spearman’s correlation is little higher than pearson correlation

- If relationship between distributions are non linear, spearman’s correlation tends to better estimate the strength of relationship

- Pearson correlation can be affected by outliers. Spearman’s correlation is more robust.

Mean VS Median

- Sometimes instead of mean we need to use median. I am going to explain why we need to use median with an example

- Lets think that there are 10 people who work in a company. Boss of the company will make raise in their salary if their mean of salary is smaller than 5

salary = [1,4,3,2,5,4,2,3,1,500]

print("Mean of salary: ",np.mean(salary))## Mean of salary: 52.5- Mean of salary is 52.5 so the boss thinks that oooo I gave a lot of salary to my employees. And do not makes raise in their salaries. However as you know this is not fair and 500(salary) is outlier for this salary list.

- Median avoids outliers

print("Median of salary: ",np.median(salary))## Median of salary: 3.0- Now median of the salary is 3 and it is less than 5 and employees will take raise in their sallaries and they are happy and this situation is fair :)

Hypothesis Testing

- Classical Hypothesis Testing

- We want to answer this question: “given a sample and a apparent effecti what is the probability of seeing such an effect by chance”

- The first step is to quantify the size of the apparent effect by choosing a test statistic. Natural choice for the test statistic is the difference in means between two groups.

- The second step is to define null hypothesis that is model of the system based on the assumption that the apparent effect is not real. A null hypothesis is a type of hypothesis used in statistics that proposes that no statistical significance exists in a set of given observations. The null hypothesis is a hypothesis which people tries to disprove it. Alternative hypothesis is a hypothesis which people want to tries to prove it.

- Third step is compute p-value that is probablity of seeing the apparent effect if the null hypothesis is true. Suppose we have null hypothesis test. Then we calculate p value. If p value is less than or equal to a threshold, we reject null hypothesis.

- If the p-value is low, the effect is said to be statistacally significant that means that it is unlikely to have occured by chance. Therefore we can say that the effect is more likely to appear in the larger population.

- Lets have an example. Null hypothesis: world is flatten. Alternative hypothesis: world is round. Several scientists set out to disprove the null hypothesis. This eventually led to the refection of the null hypothesis and acceptance of the alternative hypothesis.

- Other example. “this effect is real” this is null hypothesis. Based on that assumption we compute the probability of the apparent effect. That is the p-value. If p-value is low, we conclude that null hypothesis is unlikely to be true.

- Now lets make our example:

- I want to learn that are radius mean and area mean related with each other? My null hypothesis is that "relationship between radius mean and area mean is zero in tumor population’.

- Now we need to refute this null hypothesis in order to demonstrate that radius mean and area mean are related. (actually we know it from our previous experiences)

- lets find p-value (probability value)

statistic, p_value = stats.ttest_rel(data.radius_mean,data.area_mean)

print('p-value: ',p_value)## p-value: 1.5253492492559045e-184- P values is almost zero so we can reject null hypothesis.

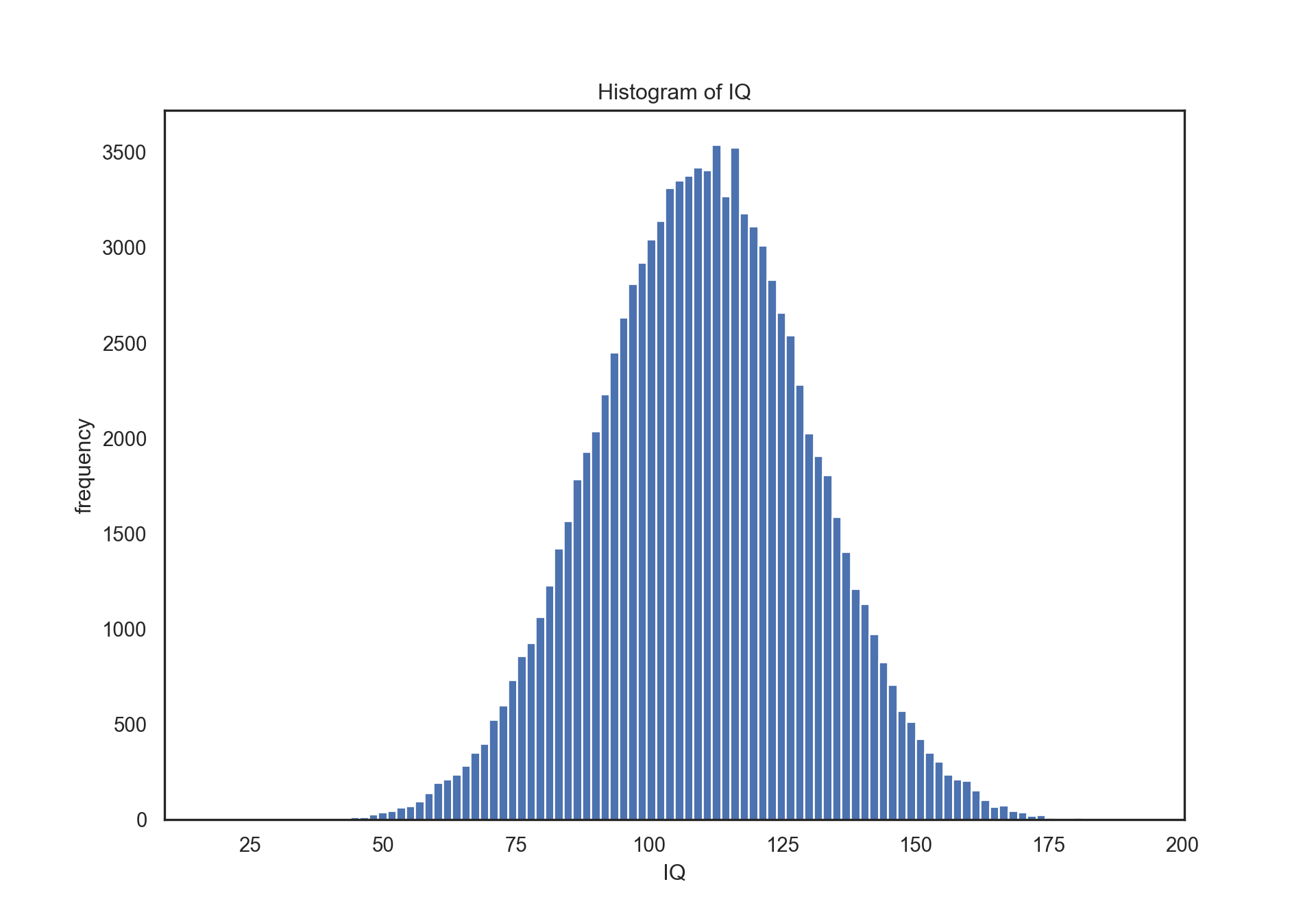

Normal(Gaussian) Distribution and z-score

- Also called bell shaped distribution

- Instead of making formal definition of gaussian distribution, I want to explain it with an example.

- The classic example is gaussian is IQ score.

- In the world lets say average IQ is 110.

- There are few people that are super intelligent and their IQs are higher than 110. It can be 140 or 150 but it is rare.

- Also there are few people that have low intelligent and their IQ is lower than 110. It can be 40 or 50 but it is rare.

- From these information we can say that mean of IQ is 110. And lets say standart deviation is 20.

- Mean and standart deviation is parameters of normal distribution.

- Lets create 100000 sample and visualize it with histogram.

# parameters of normal distribution

mu, sigma = 110, 20 # mean and standard deviation

s = np.random.normal(mu, sigma, 100000)

print("mean: ", np.mean(s))## mean: 109.99716925973718print("standart deviation: ", np.std(s))

# visualize with histogram## standart deviation: 20.039728430426035plt.figure(figsize = (10,7))plt.hist(s, 100)## (array([1.000e+00, 0.000e+00, 0.000e+00, 0.000e+00, 0.000e+00, 0.000e+00,

## 0.000e+00, 2.000e+00, 1.000e+00, 3.000e+00, 5.000e+00, 5.000e+00,

## 4.000e+00, 9.000e+00, 6.000e+00, 1.600e+01, 1.500e+01, 2.900e+01,

## 3.900e+01, 4.800e+01, 6.500e+01, 7.300e+01, 9.900e+01, 1.390e+02,

## 1.930e+02, 2.120e+02, 2.370e+02, 2.850e+02, 3.520e+02, 3.980e+02,

## 5.270e+02, 6.010e+02, 7.340e+02, 8.600e+02, 9.280e+02, 1.063e+03,

## 1.228e+03, 1.423e+03, 1.567e+03, 1.785e+03, 1.930e+03, 2.040e+03,

## 2.231e+03, 2.451e+03, 2.633e+03, 2.810e+03, 2.924e+03, 3.043e+03,

## 3.143e+03, 3.314e+03, 3.354e+03, 3.377e+03, 3.423e+03, 3.408e+03,

## 3.542e+03, 3.271e+03, 3.526e+03, 3.181e+03, 3.112e+03, 3.012e+03,

## 2.831e+03, 2.660e+03, 2.541e+03, 2.281e+03, 2.029e+03, 1.910e+03,

## 1.807e+03, 1.588e+03, 1.407e+03, 1.213e+03, 1.131e+03, 9.740e+02,

## 8.270e+02, 7.080e+02, 5.710e+02, 5.160e+02, 4.250e+02, 3.510e+02,

## 3.060e+02, 2.360e+02, 2.120e+02, 2.040e+02, 1.550e+02, 1.030e+02,

## 6.800e+01, 7.500e+01, 4.700e+01, 4.100e+01, 2.200e+01, 2.600e+01,

## 1.300e+01, 9.000e+00, 6.000e+00, 1.000e+01, 4.000e+00, 6.000e+00,

## 4.000e+00, 4.000e+00, 0.000e+00, 2.000e+00]), array([ 17.64082761, 19.38143728, 21.12204695, 22.86265662,

## 24.60326629, 26.34387596, 28.08448563, 29.8250953 ,

## 31.56570497, 33.30631464, 35.04692431, 36.78753398,

## 38.52814365, 40.26875332, 42.00936299, 43.74997266,

## 45.49058233, 47.231192 , 48.97180167, 50.71241134,

## 52.45302101, 54.19363068, 55.93424035, 57.67485002,

## 59.41545969, 61.15606936, 62.89667903, 64.6372887 ,

## 66.37789837, 68.11850804, 69.85911771, 71.59972738,

## 73.34033705, 75.08094672, 76.82155639, 78.56216606,

## 80.30277573, 82.0433854 , 83.78399508, 85.52460475,

## 87.26521442, 89.00582409, 90.74643376, 92.48704343,

## 94.2276531 , 95.96826277, 97.70887244, 99.44948211,

## 101.19009178, 102.93070145, 104.67131112, 106.41192079,

## 108.15253046, 109.89314013, 111.6337498 , 113.37435947,

## 115.11496914, 116.85557881, 118.59618848, 120.33679815,

## 122.07740782, 123.81801749, 125.55862716, 127.29923683,

## 129.0398465 , 130.78045617, 132.52106584, 134.26167551,

## 136.00228518, 137.74289485, 139.48350452, 141.22411419,

## 142.96472386, 144.70533353, 146.4459432 , 148.18655287,

## 149.92716254, 151.66777221, 153.40838188, 155.14899155,

## 156.88960122, 158.63021089, 160.37082056, 162.11143023,

## 163.8520399 , 165.59264957, 167.33325924, 169.07386891,

## 170.81447858, 172.55508825, 174.29569792, 176.03630759,

## 177.77691726, 179.51752693, 181.2581366 , 182.99874627,

## 184.73935594, 186.47996562, 188.22057529, 189.96118496,

## 191.70179463]), <BarContainer object of 100 artists>)plt.ylabel("frequency")## Text(0, 0.5, 'frequency')plt.xlabel("IQ")## Text(0.5, 0, 'IQ')plt.title("Histogram of IQ")## Text(0.5, 1.0, 'Histogram of IQ')plt.show()

As it can be seen from histogram most of the people are cumulated near to 110 that is mean of our normal distribution

However what is the “most” I mentioned at previous sentence? What if I want to know what percentage of people should have an IQ score between 80 and 140?

We will use z-score the answer this question. * z = (x - mean)/std * z1 = (80-110)/20 = -1.5 * z2 = (140-110)/20 = 1.5 * Distance between mean and 80 is 1.5std and distance between mean and 140 is 1.5std. * If you look at z table, you will see that 1.5std correspond to 0.4332

* Lets calculate it with 2 because 1 from 80 to mean and other from mean to 140

* 0.4332 * 2 = 0.8664

* 86.64 % of people has an IQ between 80 and 140.

* Lets calculate it with 2 because 1 from 80 to mean and other from mean to 140

* 0.4332 * 2 = 0.8664

* 86.64 % of people has an IQ between 80 and 140.

What percentage of people should have an IQ score less than 80?

z = (110-80)/20 = 1.5

Lets look at table of z score 0.4332. 43.32% of people has an IQ between 80 and mean(110).

If we subtract from 50% to 43.32%, we ca n find percentage of people have an IQ score less than 80.

50-43.32 = 6.68. As a result, 6.68% of people have an IQ score less than 80.